Home infrastructure renovations

Starting point

The title can and will mean very different things to different people; to some it means building a toolshed to begin with. Anyhow, we're in an IT context now, and in my personal context this renovation means getting rid of a traditional server park running on a Dell VRTX and moving more workloads into both containers and the public cloud. These activities can themselves be understood in many ways, but hopefully the story will clarify as we go along. This work has actually been ongoing for a while, and now that I had an otherwise free weekend I got things a bit further forward, to a midway state, let's say, toward the actual goal.

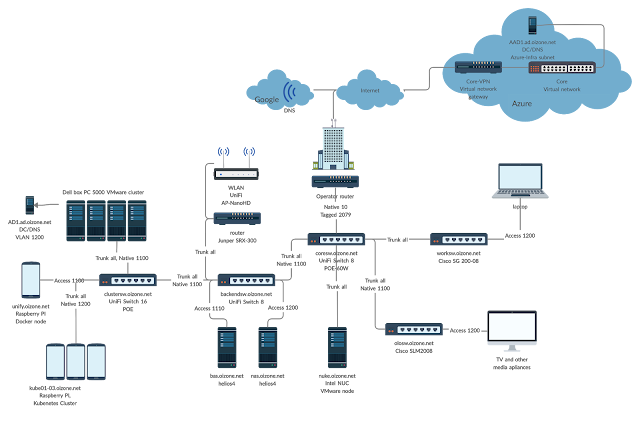

Midway physical architecture

Since pictures are always nice, here's physical architecture of my home infra.

This is almost the current state; at the moment clusterfw actually sits behind worksw, as there are some open actions going on with the devices. As one might guess, worksw is in my office and backendsw is in my closet. The Dell VRTX used to be connected to olosw, wired out to my balcony, but I've now managed to shut it down. That's a continuous 700W+ off my electricity bill; then again, the Dell Box PC cluster came in as a replacement, so I haven't actually measured what the difference is.

Target state


The target is to have most continuous functions transferred into containers, preferably running on a Raspberry PI Kubernetes cluster, and to migrate other core infra cases into the public cloud. This might sound like I'd be ramping all the VMware nodes down, but that's not actually the case. The intent is to move static workloads away from them so that the nodes can be used more efficiently for testing, for example VMware Cloud Foundation and the like.

The key thing for all the infrastructure parts in use is that they're deployed as code. Losing nodes is not an issue when data is cleanly separated from the application and the deployment is automated.

Steps on the way

The first step was to get into the containers-on-Raspberry-PI game, which is a journey with many twists and turns. First, I have to say that Hypriot has done great work in that area, even though things move quickly: one set of instructions works, and the next update breaks it. Anyhow, I got on the road and learned many interesting things.

As Hypriot provides a nice way to configure nodes using cloud-init, I first created a process where I could at any time take the SD card out of a Raspberry PI, run one command to re-flash it with the latest bits, and it would then automatically boot back up to its previous state with the same containers on it. This was of course just plain Docker with specific containers on specific nodes.
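A minimal sketch of that idea, assuming the per-node container assignments are kept as data: render one cloud-init user-data document per node that sets the hostname and starts the node's containers with docker run. The node names, container names and images are hypothetical, and this is an illustration rather than the exact files I used.

```python
# Minimal sketch: render cloud-init user-data per node so a freshly flashed
# SD card boots straight back into its previous role.
# The node names, container names and images below are hypothetical.
import json

NODES = {
    "pi-node1": [{"name": "unifi", "image": "example/unifi-controller:latest"}],
    "pi-node2": [{"name": "web", "image": "nginx:alpine"}],
}

def render_user_data(hostname: str, containers: list[dict]) -> str:
    """Return a #cloud-config document that sets the hostname and starts each
    container with `docker run` on first boot."""
    lines = ["#cloud-config", f"hostname: {hostname}", "runcmd:"]
    for c in containers:
        argv = ["docker", "run", "-d", "--restart=always", "--name", c["name"], c["image"]]
        # runcmd accepts argv lists; a JSON array is a valid YAML flow sequence.
        lines.append(f"  - {json.dumps(argv)}")
    return "\n".join(lines) + "\n"

if __name__ == "__main__":
    for host, containers in NODES.items():
        print(f"--- {host} ---")
        print(render_user_data(host, containers), end="")
```

Each rendered file could then be handed to Hypriot's flash tool together with the image (assuming its --userdata option), so re-flashing and restoring a node stays a single command. The --restart=always flag keeps the containers coming back on ordinary reboots.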

I also created a fully automated model for building a Kubernetes cluster this way, but never took it very far, as I hit huge issues with ingress controllers on ARM. There's a Hypriot blog post on how to set it all up, but it only works with older versions, and I couldn't figure it out in a reasonable time. I did convert all my Docker containers into Kubernetes YAML files and was able to run them, but just couldn't access them externally :)
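As a rough illustration of that conversion, here's a sketch that turns a docker run-style definition into a Deployment plus a Service. The Service is of type NodePort, which is one common way to reach pods from outside without an ingress controller; that part is a suggestion, not what I ended up running. The name, image and ports in the usage are hypothetical.

```python
# Minimal sketch: turn a plain docker-run style container definition into
# Kubernetes manifests. Names, images and ports are hypothetical examples.
import json

def to_manifests(name: str, image: str, port: int, node_port: int) -> list[dict]:
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }]},
            },
        },
    }
    # A NodePort Service exposes the pod on every node's IP without an ingress
    # controller; nodePort must be inside the cluster's NodePort range
    # (30000-32767 by default).
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": labels,
            "ports": [{"port": port, "targetPort": port, "nodePort": node_port}],
        },
    }
    return [deployment, service]

if __name__ == "__main__":
    # kubectl accepts JSON as well as YAML, e.g. `python gen.py | kubectl apply -f -`
    doc = {"apiVersion": "v1", "kind": "List",
           "items": to_manifests("web", "nginx:alpine", 80, 30080)}
    print(json.dumps(doc, indent=2))
```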

Automating SD cards is nice but...

As said, I did build a full automation flow for creating Docker and Kubernetes clusters with Hypriot, but it still has its issues: even though it's automated, someone still needs to plug the cards in and out, which is kind of a pain.

So this weekend I took that to the next level and converted my Raspberry PIs into PXE-booting instances with an NFS root. Now I can "re-image" any instance at any time without any physical work. Or, to be honest, that's a future state, since I'm still missing an automated power trigger for the PIs.

Flow will be:

- Power off PI

- Update "image" on NFS

- Power on PI

I'm also thinking it should probably be a blue/green type of deployment, so that the image on the other track can be updated at any time while in a stable state, and then it's just a matter of switching a symlink and resetting the PI to switch tracks.
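A minimal sketch of that blue/green idea, under a hypothetical layout: each PI has a blue and a green track directory, and the folder named after its serial is a symlink to the active one. It assumes the TFTP and NFS servers follow symlinks and that each track's cmdline.txt points at that track's own NFS root. The update and power-cycle steps are passed in as hooks, since power control isn't automated yet.

```python
# Minimal blue/green sketch for network-booted PIs. Assumed (hypothetical) layout:
#   <root>/tracks/<serial>-blue/   and   <root>/tracks/<serial>-green/
#   <root>/<serial>  -> symlink to the active track, served over TFTP/NFS
# update_track and power_cycle are hypothetical hooks supplied by the caller.
import os
from pathlib import Path
from typing import Callable

def track_dir(root: Path, serial: str, colour: str) -> Path:
    return root / "tracks" / f"{serial}-{colour}"

def active_colour(root: Path, serial: str) -> str:
    return "blue" if os.readlink(root / serial).endswith("-blue") else "green"

def switch_track(root: Path, serial: str, colour: str) -> None:
    """Repoint <root>/<serial> at the given track in one atomic rename."""
    tmp = root / f".{serial}.tmp"
    if tmp.is_symlink():
        tmp.unlink()
    tmp.symlink_to(track_dir(root, serial, colour))
    os.replace(tmp, root / serial)  # rename(2) replaces the old symlink

def reimage(root: Path, serial: str,
            update_track: Callable[[Path], None],
            power_cycle: Callable[[str], None]) -> str:
    """Update the standby track while the PI keeps running, then flip and reset."""
    standby = "green" if active_colour(root, serial) == "blue" else "blue"
    update_track(track_dir(root, serial, standby))
    switch_track(root, serial, standby)
    power_cycle(serial)
    return standby
```

The point of the temporary symlink plus rename is that the serial folder never disappears, even briefly, so a PI booting at the wrong moment sees either the old track or the new one.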

I did install PoE HATs on my PIs, so that should be possible to automate now; I just haven't checked yet how consumable the UniFi controller's API is (the controller, by the way, is also running on a PI as a container).

Lessons learned

I did a lot of work this weekend fixing things that I later learned shouldn't be used at all, but here are notes and tips around them.

DNS will fail your infrastructure

So this did happen to me, not through any actual failure but by choice, as I decommissioned and moved stuff around. As you might guess from the picture, I've been using AD for my home DNS, half of which I have now transferred to Azure, while the other half was down most of the time due to other work. Having noticed how much actually fails when DNS is not working, I spent a lot of time building a more manageable and easily maintainable DNS solution. My first idea was to use proxy-arp addresses on my router as the DNS server addresses, which are then NATed to the actual DNS servers. That way, in case of a change, it's easy to just update the router and be good to go.
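One way to keep that router-side mapping as code is to hold the VIP-to-server pairs as data and render the Junos set commands from them. The sketch assumes the router is the Juniper SRX used for the Azure VPN; the interface, zone and rule-set names and all addresses are hypothetical, and the security policies permitting the translated traffic are still needed but not shown.

```python
# Minimal sketch: keep the "DNS VIP -> real resolver" mapping as data and
# render Junos set commands for proxy-arp + destination NAT from it.
# Interface, zone, rule-set names and all addresses are hypothetical.
VIPS = {
    # VIP the clients use : real DNS server behind it
    "192.0.2.53": "10.1.0.4",
    "192.0.2.54": "10.1.0.5",
}
INTERFACE = "irb.100"   # hypothetical LAN interface answering ARP for the VIPs
FROM_ZONE = "trust"     # hypothetical zone the clients live in
RULE_SET = "dns-vip"

def render_set_commands(vips: dict[str, str]) -> list[str]:
    cmds = [f"set security nat destination rule-set {RULE_SET} from zone {FROM_ZONE}"]
    for i, (vip, server) in enumerate(sorted(vips.items()), start=1):
        pool, rule = f"dns-server-{i}", f"dns-vip-{i}"
        cmds += [
            f"set security nat destination pool {pool} address {server}/32",
            f"set security nat destination rule-set {RULE_SET} rule {rule} "
            f"match destination-address {vip}/32",
            f"set security nat destination rule-set {RULE_SET} rule {rule} "
            f"then destination-nat pool {pool}",
            # Answer ARP for the VIP so clients on the LAN can reach it at all.
            f"set security nat proxy-arp interface {INTERFACE} address {vip}/32",
        ]
    return cmds

if __name__ == "__main__":
    print("\n".join(render_set_commands(VIPS)))
```

Moving a resolver then means editing one value in VIPS and re-applying the commands, while clients keep pointing at the same VIP.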

For IPv4 this was easy: just punch in a destination NAT rule and you're good to go. IPv6 was a bit trickier. I'm not sure whether it would have been an issue with plain IPv6-to-IPv6, but I needed to do NAT64, since the VPN to Azure only works over IPv4.

The guide for doing IPv6 NAT64 is here, but personally I needed to review the rules quite a few times to get it to work. The last issue was that my normal outbound NAT64 rule was prioritised before this one, which made it fail, but eventually it worked.
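For reference, a common NAT64 convention (RFC 6052) embeds the IPv4 address in the low 32 bits of a /96 IPv6 prefix, with 64:ff9b::/96 as the well-known prefix. Whether a given SRX rule set uses that prefix or a site-specific one is a configuration choice this post doesn't pin down, so the sketch below only illustrates the address mapping itself.

```python
# Sketch of the RFC 6052 /96 mapping used by NAT64: the IPv4 address occupies
# the last 32 bits of the IPv6 address. 64:ff9b::/96 is the well-known prefix;
# a site-specific /96 works the same way.
import ipaddress

WELL_KNOWN = ipaddress.IPv6Network("64:ff9b::/96")

def to_nat64(ipv4: str, prefix: ipaddress.IPv6Network = WELL_KNOWN) -> ipaddress.IPv6Address:
    if prefix.prefixlen != 96:
        raise ValueError("this sketch only handles /96 prefixes")
    return ipaddress.IPv6Address(int(prefix.network_address) | int(ipaddress.IPv4Address(ipv4)))

def from_nat64(ipv6: str, prefix: ipaddress.IPv6Network = WELL_KNOWN) -> ipaddress.IPv4Address:
    addr = ipaddress.IPv6Address(ipv6)
    if addr not in prefix:
        raise ValueError(f"{addr} is not inside {prefix}")
    return ipaddress.IPv4Address(int(addr) & 0xFFFFFFFF)

if __name__ == "__main__":
    print(to_nat64("192.0.2.33"))   # 64:ff9b::c000:221, the RFC 6052 example
```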

By the way, since proxy-arp and proxy-ndp do practically the same thing, why do they need to be separate? That was one of my first issues when I tried to do the same thing I had done for the IPv4 destination NAT, but...

Anyhow, long story short, I eventually realized that as part of my transition plan I had already moved the majority of my DNS zones into GCP, so the AD DNS was actually only needed by AD members, which currently means just the domain controllers and two jump servers: one at home and one in Azure, in case you're wondering.

Fundamentally, the main lesson here is not that I don't need AD DNS at home, but that the impact of a DNS failure is enormous in any scenario. Turned around, this means that the setup most commonly used for DNS in many environments, Active Directory, is far too complex for such a core function. AD DNS is obviously needed for AD members, but for the fundamental core infrastructure it's far too complex to repair if or when it fails and causes infra downtime.

Core DNS must run on a service that is extremely easy to rebuild from scratch just by ingesting the records, which are of course stored somewhere in infra-as-code format.
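A minimal sketch of that idea, assuming the records live as plain data in a repository: render them into a standard RFC 1035 zone file, a format that authoritative servers such as BIND, NSD or CoreDNS's file plugin can load. The zone name, addresses, SOA timers and serial are hypothetical.

```python
# Minimal sketch: DNS records kept as data in code and rendered into a
# standard RFC 1035 zone file that an authoritative server can load.
# Zone name, addresses, SOA timers and the serial are hypothetical examples.
from dataclasses import dataclass

@dataclass(frozen=True)
class Record:
    name: str    # relative to the zone origin, "@" for the apex
    rtype: str   # "A", "AAAA", "CNAME", "NS", ...
    value: str

ZONE = "home.example."
RECORDS = [
    Record("@", "NS", "ns1"),
    Record("ns1", "A", "192.0.2.53"),
    Record("unifi", "A", "192.0.2.10"),
    Record("unifi", "AAAA", "2001:db8::10"),
]

def render_zone(origin: str, records: list[Record], serial: int, ttl: int = 3600) -> str:
    lines = [
        f"$ORIGIN {origin}",
        f"$TTL {ttl}",
        # SOA: primary NS, admin mailbox, serial, refresh, retry, expire, negative TTL
        f"@ IN SOA ns1 hostmaster {serial} 7200 900 1209600 300",
    ]
    for rec in sorted(records, key=lambda rec: (rec.name, rec.rtype)):
        lines.append(f"{rec.name} IN {rec.rtype} {rec.value}")
    return "\n".join(lines) + "\n"

if __name__ == "__main__":
    print(render_zone(ZONE, RECORDS, serial=2024010101), end="")
```

Rebuilding the DNS server then boils down to installing any server that reads zone files and dropping the rendered file in place.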

Raspberry PI PXE boot

Searching for this info was very frustrating, so for anyone who wants to PXE boot a Raspberry PI, the following DHCP options will do it:

```
option 43 string "Raspberry Pi Boot";
boot-server 10.0.2.180;
```

Beyond these, the short version is that the contents of /boot need to be placed in a TFTP folder named after the PI's serial number, which you can see with cat /proc/cpuinfo. Just drop the leading zeros from the serial.
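A small helper for that step, sketched under the assumption that the folder name is the eight-hex-digit part of the Serial line in /proc/cpuinfo. For a typical serial such as 00000000a1b2c3d4 that's the same as dropping the leading zeros; if the eight-digit part itself starts with a zero, it's worth checking the TFTP server log for the exact folder name requested, since this sketch keeps that zero.

```python
# Minimal sketch: work out the TFTP folder name a network-booting PI asks for,
# from the "Serial" line of /proc/cpuinfo. Keeping the last 8 hex digits is
# the same as dropping the leading zeros for a serial like 00000000a1b2c3d4.
import re
from pathlib import Path
from typing import Optional

def serial_folder(cpuinfo: str) -> Optional[str]:
    m = re.search(r"^Serial\s*:\s*([0-9a-fA-F]+)\s*$", cpuinfo, re.MULTILINE)
    if m is None:
        return None          # e.g. not running on a Raspberry PI
    return m.group(1).lower()[-8:]

if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print(serial_folder(cpuinfo.read_text()))
```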

The Raspberry PI doesn't always succeed with the PXE boot, but it does at least 50% of the time. Since there's no console output before the boot code has been loaded over PXE, it's really difficult to say what the problem is, or whether it's just random.

VPN to Azure with Juniper SRX

Here's my config for creating a site-to-site VPN to Azure:

```
security {
    ike {
        proposal azure-proposal {
            authentication-method pre-shared-keys;
            dh-group group2;
            authentication-algorithm sha1;
            encryption-algorithm aes-256-cbc;
            lifetime-seconds 28800;
        }
        policy azure-policy {
            mode main;
            proposals azure-proposal;
            pre-shared-key ascii-text "?????"; ## SECRET-DATA
        }
        gateway azure-gateway {
            ike-policy azure-policy;
            address ??.??.??.??; ## AZURE IP
            external-interface irb.0;
            version v2-only;
        }
    }
    ipsec {
        proposal azure-ipsec-proposal {
            protocol esp;
            authentication-algorithm hmac-sha1-96;
            encryption-algorithm aes-256-cbc;
            lifetime-seconds 27000;
        }
        policy azure-vpn-policy {
            proposals azure-ipsec-proposal;
        }
        vpn azure-ipsec-vpn {
            bind-interface st0.0;
            ike {
                gateway azure-gateway;
                ipsec-policy azure-vpn-policy;
            }
        }
    }
    flow {
        tcp-mss {
            ipsec-vpn {
                mss 1350;
            }
        }
    }
    zones {
        security-zone untrust {
            screen untrust-screen;
            host-inbound-traffic {
                system-services {
                    ike;
                }
            }
            interfaces {
                irb.0;
            }
        }
        security-zone Azure {
            interfaces {
                st0.0;
            }
        }
    }
}
interfaces {
    st0 {
        unit 0 {
            family inet;
        }
    }
}
```
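To see where the mss 1350 line sits, here's rough worst-case arithmetic for the proposals above (ESP tunnel mode, AES-256-CBC, HMAC-SHA1-96, IPv4 outer header), assuming a 1500-byte path MTU and no IP options. It's a back-of-the-envelope sketch, not a statement of what Azure requires.

```python
# Rough worst-case sizing for ESP tunnel mode with AES-256-CBC + HMAC-SHA1-96
# over IPv4, matching the proposals above. Assumes no IP options and a plain
# 1500-byte path MTU; NAT-T adds an 8-byte UDP header when used.
IPV4_HDR = 20
TCP_HDR = 20
ESP_HDR = 8       # SPI + sequence number
IV = 16           # AES-CBC initialisation vector
TRAILER = 2       # pad length + next header
ICV = 12          # HMAC-SHA1-96 truncated tag
BLOCK = 16        # AES block size

def max_inner_packet(path_mtu: int = 1500, nat_t: bool = False) -> int:
    """Largest inner IPv4 packet that still fits after ESP encapsulation."""
    overhead = IPV4_HDR + ESP_HDR + IV + ICV + (8 if nat_t else 0)
    room = path_mtu - overhead
    # Encrypted part (inner packet + padding + 2-byte trailer) is a multiple of BLOCK.
    return (room // BLOCK) * BLOCK - TRAILER

def max_mss(path_mtu: int = 1500, nat_t: bool = False) -> int:
    return max_inner_packet(path_mtu, nat_t) - IPV4_HDR - TCP_HDR

if __name__ == "__main__":
    print(max_mss(), max_mss(nat_t=True))   # prints "1398 1382"
```

On those assumptions the ceiling is about 1398 bytes, or 1382 with NAT-T, so 1350 leaves some headroom for extra encapsulation or a smaller path MTU along the way.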

Way forward

The next actions are setting up k3s on my Raspberry PI nodes, which should be a more straightforward fit for the PIs than full Kubernetes. Same but different.

The other part is setting up Jenkins to run the overall config flow; otherwise, manually driving a lot of automated parts is not actual automation.

As mentioned before, one part of these flows needs to be power control for the PI nodes.

I'll post back again once I get to my next steps.
